I’ve recently spoken with several venture-backed enterprise startups that are performing below target, although not long ago they were putting up strong numbers. The specifics vary but the symptoms overlap: missed quarterly sales targets, high customer churn, declining NPS, low usage of new features, and so on.

The topic of discussion: how do we get the business back on track?

The answer depends on the degree of product/market fit. For a recently launched enterprise product that is underperforming, it may be necessary to go backward in order to go forward. The problems the product solves may not be so painful after all, and the best next step may be to repeat the process of customer discovery.

But for the startups I’ve spoken with recently, it appears the poor performance is a symptom of early success, of growing beyond an early market of enthusiasts and earlyvangelists. As the target market has evolved, product/market fit has been eroded. Use cases, technical sophistication, and risk tolerance change as the target customer expands from a relatively small group of “best and brightest” specialists to the broad base of junior people in mainstream enterprises.

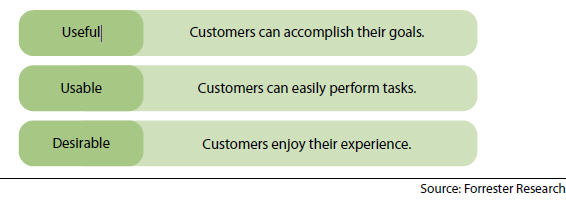

The product is less useful and usable for these “new” customers than it was for the “old” customers. Competing products evolve too, and can set a higher benchmark for usefulness and usability.

For these companies, I focus on improving user efficiency, raising task completion rates, and reducing risk. These improvements make the product more useful (“it does what I need it to do”) and usable (“I can successfully complete a task with it”). They can require changes to the core product (updating workflows, removing or “killing” features) or to the whole product more broadly (data protection, legacy system integration, and so forth).

Problem discovery testing

To find the problems underlying the poor performance, I’ve begun testing products with a usability technique called problem discovery (as distinct from Steve Blank’s use of the same term) to uncover deficits in how useful and usable the product is.

I begin problem discovery sessions by interviewing each participant. First, I elicit the participant’s own goals when using the product, rather than testing the tasks we “inside” the company think the product should be used for. To understand the goals’ relative importance, I ask each participant to pick the three most important ones from her informal list of all goals. I get details of recent experiences pursuing those top three goals or, even better, reasons for pursuing them right now. And I ask how many times she’s used the product to complete each of those tasks in a recent time interval (e.g., week, month). Later, using each task’s importance and frequency, I calculate an aggregate “importance” score to identify the most important tasks across all participants.
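The aggregate score can be calculated in more than one way, and the exact formula is a judgment call. As a minimal sketch, assume each participant's answers are recorded as a mapping from her top three tasks to weekly usage counts, and score each task as the share of participants who picked it multiplied by the mean usage among those who picked it. The function name, the sample tasks, and the weighting itself are illustrative assumptions, not a standard formula.

```python
from collections import defaultdict


def aggregate_importance(responses):
    """Rank tasks across participants.

    responses: one dict per participant, mapping each of her top-three
    tasks to the number of times she used the product for it per week.
    The scoring rule (pick share x mean frequency) is an assumed weighting.
    """
    picks = defaultdict(int)    # how many participants chose the task
    uses = defaultdict(float)   # total weekly uses reported for the task
    for top_tasks in responses:
        for task, weekly_uses in top_tasks.items():
            picks[task] += 1
            uses[task] += weekly_uses

    n = len(responses)
    scores = {
        task: (picks[task] / n) * (uses[task] / picks[task])
        for task in picks
    }
    # Highest score first
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical interview data for three participants
responses = [
    {"build report": 5, "export data": 2, "share dashboard": 1},
    {"build report": 3, "export data": 4, "invite user": 1},
    {"build report": 4, "share dashboard": 2, "set alert": 6},
]
ranked = aggregate_importance(responses)
for task, score in ranked:
    print(f"{task}: {score:.2f}")
```

With this weighting, a task that everyone picks and uses often rises to the top, while a task picked by one heavy user still registers. If you want importance to count for more than frequency, swap in a different rule.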

Then it’s “show me” time. I ask participants, while thinking aloud, to complete each of their top three tasks with the product, to achieve a recent or immediate goal. I largely let them complete the task uninterrupted, but I do check in with them to get additional perspective when they’re happy, frustrated, stymied by a mistake, etc. Reviewing the recordings of these tasks later, I’m able to identify the issues that impeded each task and record their frequency, impact, and likely causes.

Finally, I ask participants to rate how easy or how difficult each task was using a seven-point Likert scale called the Single Ease Question (SEQ).

The outputs are invaluable for identifying and prioritizing changes to make the product more useful and usable:

- top tasks or jobs the participants want to complete with the product;

- ease of use ratings for each of those tasks; and

- top issues impeding each of the most important tasks and their causes.

Competitive products can be tested in the same manner to reveal insights into how well they’ve adjusted to the market’s evolving demands.

Where does it hurt?

It’s essential to recruit participants who are representative of customers in underperforming markets. Participants may come from the current customer base, prospects in new target markets, or both, depending on the situation.

Beyond the essential requirement that participants have the problem your product solves, they should also share key attributes of the target customer where your product has been underperforming:

- problematic behavior (churn, reduced usage, etc.)

- target markets where sales lag (vertical market, company size, skillset or experience, etc.)

- relationship to your company (current customer, prospect, competitor’s customer)

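In my experience, five participants per distinct customer segment, the generally accepted baseline for usability testing, is sufficient to identify the critical issues. But the most reliable estimate of the required number of participants is based on the issues discovered with an initial set of participants, using the binomial probability formula 1-(1-p)^n, where p is the probability that a single participant encounters a given issue and n is the number of participants. The result is the probability that the issue is observed at least once across the n sessions.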
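To make the formula concrete, here is a short sketch of how it might be applied. The function names, the 85% discovery target, and the sample numbers are assumptions chosen for illustration. The estimate of p is the simple unadjusted average from an initial round of sessions, which tends to run high with very small samples.

```python
def estimate_p(participants_per_issue, n_initial):
    """Unadjusted estimate of p from an initial round of sessions.

    participants_per_issue: for each issue found, how many of the
    initial participants encountered it.
    n_initial: number of participants in the initial round.
    """
    total_hits = sum(participants_per_issue)
    return total_hits / (len(participants_per_issue) * n_initial)


def discovery_rate(p, n):
    """Probability an issue is seen at least once in n sessions: 1-(1-p)^n."""
    return 1 - (1 - p) ** n


def participants_needed(p, target=0.85):
    """Smallest n whose discovery rate reaches the target (assumed 85%)."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    if not 0 < target < 1:
        raise ValueError("target must be in (0, 1)")
    n = 1
    while discovery_rate(p, n) < target:
        n += 1
    return n


# Hypothetical example: an issue that 31% of participants would hit
print(round(discovery_rate(0.31, 5), 3))   # chance of seeing it with 5 people
print(participants_needed(0.31, 0.85))     # people needed for an 85% chance
```

The lower p turns out to be in your initial sessions, the more participants each segment needs before you can be confident the important issues have surfaced.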

You may want to process or combine these insights with other inputs before backlog prioritization. For example, the list of most important tasks can be statistically validated with a customer survey, and weighted with business value.

Evolve or die

As enterprise technology markets evolve and mature, the customer requirements evolve, too. Products that were useful and usable to earlier generations of enthusiast and earlyvangelist customers begin underperforming when they reach the broad base of junior people in mainstream enterprises. If you suspect your product is losing its product/market fit, I urge you to test the technique I’ve described here.
