AI transcription of win/loss interviews can be one-tenth the cost and 96% faster than traditional human transcription.

But for complex technology sales, AI-powered transcription services often fall short, requiring additional cleanup for polished, readable transcripts. That’s what our team at Growth Velocity ran into when we tried several automated services last year.

In light of recent advancements in the AI space, we wondered: Had automated transcription services since improved?

To find out, we compared two human-based transcription services and four machine-powered ones, paying special attention to accuracy, readability, and confidentiality.

In our test, AI won. Below, we explain the details of our experiment and how we reached this verdict.

Background

What is automatic speech recognition (ASR)?

Recent discussion of artificial intelligence has largely focused on generative AI—the AI techniques that produce novel content based on existing data. But perhaps just as prevalent is speech recognition technology, also known as automatic speech recognition (ASR).

ASR falls into another subset of AI that focuses on converting spoken language into written text. And it’s everywhere now, from mobile dictation to Zoom call transcriptions to voice assistants and smart speakers like Alexa and Sonos.

In fact, many of the leading cloud and AI providers now offer ASR systems, including Whisper from OpenAI, Google Cloud’s Speech-to-Text API, IBM Watson Speech to Text, and Azure AI’s Speech service from Microsoft.

ASR for Win/Loss Interviews

In our work at Growth Velocity, ASR shows great potential for expediting win/loss interview transcription for as little as a few cents per minute of audio. Where a human might take one or two days to turn around a 45-minute interview, AI can get the job done in less than an hour, sometimes in minutes.

Just one problem: AI struggles with complex topics, often failing to recognize specialized words and phrases. As a result, these terms are transcribed inaccurately, making manual cleanup necessary.

This is the challenge we face at Growth Velocity. We use win/loss analysis to understand the decision-making processes of buyers in industries where technical jargon is the norm. So whether man- or machine-powered, run-of-the-mill transcription services simply can’t keep up.

Man vs. Machine: Our Test of Human-Based and AI-Powered Transcription Services

In our past experience, AI services could produce only a basic interview transcript at best, one that required time-consuming edits before it was usable. But with AI making great headway in the last year, we got curious: had automated transcription improved?

To find out, we decided to compare six transcription services: two human-based and four AI-powered.

Transcription Services We Tested

| Human Transcription Services | AI Transcription Services |
|---|---|
| A boutique transcription service for market research | Sonix.ai |
| An ASR service using a combination of Google Cloud’s Speech-to-Text API and human editors | Machine Express from TranscribeMe |
| | Rev Max |
| | Otter.ai |

Note that we have signed NDAs with both of the human-based services, so we haven’t disclosed these business names.

We defined the human-based transcription services as those that had any human element in the editorial process, while AI services were purely machine-generated.

To compare their quality, we fed each of them the same audio: a one-hour interview with a buyer in a complex sale. Interested in how AI would perform, a client graciously let us use the interview for this test.

We then judged the transcription results based on three criteria:

- Accuracy

- Readability

- Confidentiality

Accuracy

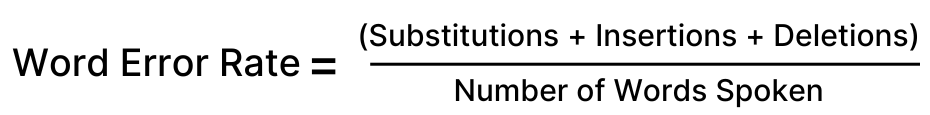

How many transcription mistakes did the service make? We used word error rate (WER), the number of errors divided by the total words spoken, to measure accuracy.

WER defines three types of errors: substitutions (misspellings and incorrectly transcribed words), insertions (words that were included in the transcript but not in the original audio), and deletions (words omitted from the transcript but present in the audio).
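To make the three error types concrete, here is a minimal sketch of how WER can be computed by aligning a reference transcript against a service's output with a word-level edit distance. It is an illustration only, not the scoring tool used in this test: the function and class names are hypothetical, and the normalization (lowercasing and splitting on whitespace) is a simplifying assumption that ignores punctuation.

```python
from dataclasses import dataclass


@dataclass
class WerResult:
    substitutions: int
    insertions: int
    deletions: int
    reference_words: int

    @property
    def total_errors(self) -> int:
        return self.substitutions + self.insertions + self.deletions

    @property
    def wer(self) -> float:
        # Errors divided by the number of words actually spoken.
        return self.total_errors / self.reference_words


def word_error_rate(reference: str, hypothesis: str) -> WerResult:
    """Align hypothesis to reference with a word-level edit distance."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # table[i][j] = (edits, subs, ins, dels) needed to turn ref[:i] into hyp[:j]
    rows, cols = len(ref) + 1, len(hyp) + 1
    table = [[(0, 0, 0, 0)] * cols for _ in range(rows)]
    for i in range(1, rows):
        table[i][0] = (i, 0, 0, i)  # every reference word deleted
    for j in range(1, cols):
        table[0][j] = (j, 0, j, 0)  # every hypothesis word inserted

    for i in range(1, rows):
        for j in range(1, cols):
            if ref[i - 1] == hyp[j - 1]:
                table[i][j] = table[i - 1][j - 1]  # words match, no new error
                continue
            e, s, n, d = table[i - 1][j - 1]
            substitution = (e + 1, s + 1, n, d)
            e, s, n, d = table[i][j - 1]
            insertion = (e + 1, s, n + 1, d)
            e, s, n, d = table[i - 1][j]
            deletion = (e + 1, s, n, d + 1)
            # Tuples compare by total edits first, so the cheapest path wins.
            table[i][j] = min(substitution, insertion, deletion)

    _, subs, ins, dels = table[-1][-1]
    return WerResult(subs, ins, dels, len(ref))


result = word_error_rate(
    "the vendor gave us a high quote",
    "the fender gave a pie quote today",
)
print(result.substitutions, result.insertions, result.deletions)
```

In this made-up example, "vendor" and "high" are substituted, "us" is deleted, and "today" is inserted, so four errors over seven spoken words.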

In our work with B2B technology buyers, transcription services often stumble over industry terminology and tech lingo. To improve recognition and accuracy, most services offer custom dictionaries. Still, 100% accuracy is rare: simple misspellings, such as those caused by homophones and unique pronunciations, remain possible.

Whatever their cause, these errors distract from the key points of an interview. They also raise doubts about whether what was transcribed was actually said.

Below is a detailed breakdown of WER scores for the six transcription services we tested. These results are based on 1,199 words of audio.

Word Error Rate Test Results

| Service | Substitutions | Insertions | Deletions | Total Errors | WER |
|---|---|---|---|---|---|
| Boutique transcription service | 3 | 1 | 50 | 54 | 4.5% |
| Combination ASR / human editing service | 13 | 19 | 15 | 47 | 3.9% |
| Otter.ai | 57 | 0 | 18 | 75 | 6.3% |
| Rev Max | 18 | 7 | 33 | 58 | 4.8% |
| Sonix.ai | 13 | 2 | 22 | 37 | 3.1% |
| TranscribeMe Machine Express | 30 | 4 | 22 | 56 | 4.7% |

Readability

Readability describes how easily a piece of text can be understood. When content readability is low, it’s hard to understand its meaning—readers spend time trying to parse big words and complex sentences.

In transcription specifically, the biggest challenges in readability revolve around detecting when a sentence ends and recording the sounds and filler words common in natural speech, like “um” and “uh.” This can result in long, run-on sentences that make a piece of text hard to understand.

To gauge each service’s readability in our test, we used the Flesch Reading-Ease test, a formula that takes into account sentence length and word length. Here’s how its scoring system, based on a scale of 0 to 100, works:

- 90-100: Very easy (5th-grade level or lower)

- 80-89: Easy (6th-grade level)

- 70-79: Fairly easy (7th-grade level)

- 60-69: Standard (8th-9th-grade level)

- 50-59: Fairly difficult (10th-12th-grade level)

- 30-49: Difficult (college level)

- 0-29: Very or extremely difficult (college graduate level)
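For readers who want to see how sentence length and word length feed into the score, here is a rough sketch of the standard Flesch Reading-Ease formula. It is not the tool used in this test. The syllable counter is a crude vowel-group heuristic and the sentence splitter only looks at end punctuation, so its scores will differ somewhat from dedicated readability tools; all function names are illustrative.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic: count runs of vowels rather than look words up."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Treat a trailing "e" as silent (as in "rate"), except in "-le" endings.
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_reading_ease(text: str) -> float:
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z'’]+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


def reading_band(score: float) -> str:
    """Map a score to the bands listed above."""
    bands = [
        (90, "Very easy"),
        (80, "Easy"),
        (70, "Fairly easy"),
        (60, "Standard"),
        (50, "Fairly difficult"),
        (30, "Difficult"),
    ]
    for floor, label in bands:
        if score >= floor:
            return label
    return "Very or extremely difficult"


run_on = "We picked the vendor, they were cheap, we liked the demo."
split_up = "We picked the vendor. They were cheap. We liked the demo."
print(flesch_reading_ease(run_on) < flesch_reading_ease(split_up))  # True
```

The two sample strings contain the same words, so the only difference is punctuation. Replacing commas with periods shortens the average sentence and raises the score, which is why a transcript's sentence-break choices matter so much for this metric.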

Confidentiality

In our win/loss interviews, buyers share detailed feedback about specific vendors. This often includes sensitive business information. So to protect the privacy and interests of all parties involved, it’s important to treat the information shared in our interviews as confidential.

Naturally, the need for confidentiality varies. For example, transactional sales often require less sensitivity. However, for our clients with complex sales to mid-market and enterprise companies, non-disclosure is a must.

To judge each service’s level of confidentiality, we looked at their policies and practices for security and privacy. We wanted to see that there are policies and technologies in place to reduce the possibility of data being shared unintentionally.

The services that ranked highly in confidentiality were those that had clear policies and technologies preventing unintentional sharing of customer data.

In the case of the AI transcription services, we were particularly interested in whether a vendor’s policies permitted other uses of our data, such as using customer data to train their AI models.

Results

Between man and machine, which team did better?

With the lowest error rate (3.1%), second best readability score (77.25), and strong data privacy policies, the AI-powered Sonix.ai got the gold medal.

Here’s how our test played out:

Win/Loss Transcription Comparison Table

| Service | Cost (per minute) | Accuracy (WER) | Flesch Reading-Ease | Confidentiality |
|---|---|---|---|---|
| Boutique transcription service | $2.50 | 4.5% | 70.78 | High |
| Combination ASR/human editing service | $2.00 | 3.9% | 73.02 | High |
| Otter.ai (Pro) | $0.01 | 6.3% | 66.3 | Low |
| Rev Max | $0.25 | 4.8% | 75.78 | Low |
| Sonix.ai | $0.17 | 3.1% | 77.25 | High |
| TranscribeMe Machine Express | $0.07 | 4.7% | 80.02 | Low |
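The rankings cited throughout this post can be reproduced from the table's figures. The sketch below is only a convenience for checking them, not a scoring method we used: the `Service` type and helper names are illustrative, and the 45-minute interview length is borrowed from the turnaround example earlier in the post.

```python
from typing import NamedTuple


class Service(NamedTuple):
    name: str
    cost_per_minute: float  # USD per minute of audio
    wer_percent: float      # lower is better
    flesch: float           # higher is better
    confidentiality: str


SERVICES = [
    Service("Boutique transcription service", 2.50, 4.5, 70.78, "High"),
    Service("Combination ASR/human editing service", 2.00, 3.9, 73.02, "High"),
    Service("Otter.ai (Pro)", 0.01, 6.3, 66.3, "Low"),
    Service("Rev Max", 0.25, 4.8, 75.78, "Low"),
    Service("Sonix.ai", 0.17, 3.1, 77.25, "High"),
    Service("TranscribeMe Machine Express", 0.07, 4.7, 80.02, "Low"),
]

# Rank by each criterion, best first.
by_accuracy = sorted(SERVICES, key=lambda s: s.wer_percent)
by_readability = sorted(SERVICES, key=lambda s: s.flesch, reverse=True)


def interview_cost(service: Service, minutes: int = 45) -> float:
    """Cost in USD to transcribe one interview of the given length."""
    return round(service.cost_per_minute * minutes, 2)


for s in by_accuracy:
    print(f"{s.name:40} WER {s.wer_percent:.1f}%  "
          f"45-min cost ${interview_cost(s):.2f}  "
          f"confidentiality {s.confidentiality}")
```

Sorting this way puts Sonix.ai first on accuracy and second on readability, and it is the only AI-powered service rated High on confidentiality.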

Below, we’ll go through each service’s performance in more detail, as well as their cost, turnaround, and glossary offerings.

Boutique transcription service

- Cost: $2.50 per minute of audio

- Turnaround: Next day delivery

As one of our human-based options, we chose an onshore transcription service that specializes in market research. Its team consists of professional typists, each with at least a decade of tenure, and the service offers an unlimited custom glossary. Most reassuring was the service’s strong data privacy practices, which included NDAs and DPAs with clients and file exchange through the secure file-sharing service Sharefile.com. The service also deletes files after transcription.

This service’s transcription landed in the middle of the pack with the third best WER (4.5%) but second worst readability score (70.78). That said, these ratings don’t fully capture the transcript’s overall positive reading experience and the editorial decisions made to achieve it.

Specifically, we observed:

- Of all services tested, this transcript had the fewest substitutions (3) and the second fewest insertions (1).

- The total number of errors (54) is skewed because some omissions were made deliberately to improve readability, such as disfluencies and filler words like “you know” and “like.” Excluding these, the total number of deletions is 44 rather than 50.

- Adjusted for these errors, this transcript’s WER is 4.0%, as opposed to 4.5%.

- To indicate pauses in the interview, the typists used commas rather than periods. Doing so created longer sentences, which lowers the Flesch Reading-Ease score.

- Long word strings were also broken into sentences in a readable way.

- When a speaker quoted someone, the transcript accurately captured this with quotation marks—a detail that Flesch Reading-Ease does not take into account.
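The adjustment described in the list above is simple arithmetic on the counts from the WER table. A small sketch (the helper name is illustrative):

```python
TOTAL_WORDS = 1199  # words of audio scored in the test


def wer_percent(substitutions: int, insertions: int, deletions: int,
                total_words: int = TOTAL_WORDS) -> float:
    """Word error rate as a percentage of the words spoken."""
    return 100 * (substitutions + insertions + deletions) / total_words


raw = wer_percent(3, 1, 50)       # all 50 deletions counted
adjusted = wer_percent(3, 1, 44)  # deliberate filler-word omissions excluded

print(f"raw: {raw:.1f}%  adjusted: {adjusted:.1f}%")
# prints "raw: 4.5%  adjusted: 4.0%"
```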

Combination ASR/human editing service

- Cost: $2.00 per minute of audio

- Turnaround: 2 days

The second human-based transcription service we tested used a hybrid model: one combining human editors with either Google’s Speech-to-Text technology or Whisper from OpenAI. Like the fully human transcription service, it offers an unlimited glossary. It also follows strong data privacy measures, using secure file transfer protocols and NDAs with clients, employees, and independent contractors.

By default, Speech-to-Text from Google does not log project data; it logs data only for projects that have data logging enabled. For our test, we chose not to log data with Google. However, unless otherwise requested, the transcription service itself (separate from Google Cloud) holds onto client project data for 36 months.

Considering that the combination service involves human editors, we expected a high rate of accuracy, or at least one comparable to the other human-based option. Indeed, its transcript had the second best error rate (3.9%), although across all services, it inserted extra words most often.

In readability, the combination service’s transcript scored 73.02, close to the other human-based option. Though highly readable, it missed a few sentence breaks and did not include quotation marks when a speaker quoted another person.

Otter.ai

Otter.ai is an AI-powered transcription company that’s also known for its live captioning feature. Its free Basic plan includes a custom vocabulary of five words or phrases, while the Pro plan allows up to 100 names and 100 other vocabulary terms. The Business plan gives teams significantly more: 800 names and 800 other vocabulary terms. For our test, we used the Pro plan.

Of all six services, Otter.ai had by far the worst WER (6.3%)—1.5 percentage points above the second worst. Many common words were substituted, including:

- “Weave” → “we’ve”

- “Third” → “search”

- “Quotes” → “quotas”

- “Gonna” → “going to”

And despite its custom glossary, Otter.ai still occasionally missed trade names in our transcript.

Besides scoring worst in accuracy, Otter.ai also scored lowest in terms of readability. Its biggest issue: run-on sentences. Instead of inserting periods, it used commas, creating extremely long sentences. While Otter.ai could clearly identify different speakers, it organized their dialogue based on their separate talking turns—meaning one continuous block of text if anyone spoke at length.

In terms of confidentiality, Otter.ai also misses the mark. You can delete your conversations and the software will permanently purge them. However, according to its terms of service, Otter.ai has “a worldwide, non-exclusive, royalty-free, fully paid right and license (with the right to sublicense)” to use your content.

Rev Max

- Cost: $0.25 per minute of audio

- Turnaround: five minutes

Calling itself the “#1 speech-to-text service in the world,” Rev offers both automated and human transcription services. For our test, we used Rev Max, its subscription offering that claims 90% accuracy.

Following Otter.ai, Rev Max’s transcript had the second most errors, with a WER of 4.8%. Although the software offers a custom glossary of 1,000 phrases, the transcription still missed the occasional trade name.

Of the AI-powered services specifically, Rev Max’s transcript had the most deletions—although this may be in part because it excludes disfluencies and filler words like “like” and “you know” by default. This may also be why, among the six services, its readability score (75.78) was above average. Since the Flesch Reading-Ease test looks at sentence length, these omissions probably helped.

Its Flesch Reading-Ease score aside, Rev Max’s transcript was moderately readable. It started a new paragraph whenever the speaker changed or someone spoke for more than a minute. It also broke conversational word strings into small sentences in a way that the speaker did not intend but that prevented long run-ons. However, overall, the transcript had poor punctuation and did not add quotation marks when speakers quoted someone.

Like Otter.ai, Rev’s terms of service stipulate it has “a fully paid-up, royalty-free, worldwide, non-exclusive right and license” to use your data. That includes training its own ASR technology with your content.

Sonix.ai

- Cost: $0.17 per minute of audio

- Turnaround: “15 minute file, it should be transcribed in roughly 15 minutes”

Neither the cheapest nor the most expensive service, Sonix.ai is an automated transcription service that offers both pay-as-you-go and subscription plans. It provides a network of transcriptionists across the globe who can clean up your Sonix transcripts, effectively creating a hybrid model like the combination service described earlier. However, for this test, we used only its AI features in the Standard pay-as-you-go plan.

Impressively, Sonix.ai’s transcript had the lowest WER (3.1%). Although it confused one instance of “their” and “they’re,” it notably did not miss any trade names added to the custom glossary.

The transcript was also highly readable since it separated dialogue based on speaker and broke up strings of words into sentences rather than outputting them as long run-ons. Occasionally, however, this also included breaking a single sentence into two.

Setting Sonix.ai far apart from the other AI services, however, is its approach to data and privacy. Unlike Otter.ai, TranscribeMe, and Rev, Sonix doesn’t require its customers to give a “worldwide, non-exclusive, royalty-free, fully paid right and license.” Further, it doesn’t use or share any data without customers’ express permission—and if customers delete data from the platform, it’s removed completely.

TranscribeMe Machine Express

- Cost: $0.07 per minute of audio

- Turnaround: “typically within 1 x of the duration of the audio file”

TranscribeMe is a transcription company that offers both human and computer-generated transcripts. Its human transcription work relies on freelancers from around the world, and comes with three pricing options ranging from $0.79 to $2.00 per minute of audio. For our test, however, we used TranscribeMe’s more affordable ASR option known as Machine Express.

Of the six services tested, Machine Express had the third worst error rate (4.7%) yet the highest readability score (80.02). We suspect the high readability score is because, like Rev Max, Machine Express omits filler words such as “like” and “you know” by default. As a result, its transcript had shorter sentences, an important factor in the Flesch Reading-Ease test.

Despite its high Flesch Reading-Ease score, however, we found this transcript the hardest to read. Part of this was its spelling errors. Since Machine Express doesn’t provide a custom glossary, many trade names and industry-specific terms were misspelled. In addition, it missed several common words, including:

- “Search” → “third”

- “Vendor” → “fender”

- “High” → “pie”

The transcript’s poor formatting hurt readability further. Instead of separating dialogue by speaker, the AI did so in 30-second intervals. As a result, whenever someone spoke for more than 30 seconds, their speech was broken into two or more paragraphs. In theory, this makes for a more scannable format than one continuous block of text. In practice, it made the text less coherent, since a single topic could be spread out over multiple paragraphs.

Finally, from a confidentiality perspective, Machine Express resembles Otter.ai and Rev Max. Using its services grants TranscribeMe—and its affiliated companies, like Stenograph—non-exclusive rights to your content.

Conclusion

When we first tested them last year, ASR services produced basic transcripts of our win/loss interviews—ones in need of heavy cleanup. However, our recent test of six transcription services surprised us, with the AI-powered Sonix.ai coming out on top.

What do the results mean for us?

Human transcriptionists have long been our go-to at Growth Velocity. While AI-powered services offer advantages in cost and accuracy, we’re not switching over right away. In fact, we expect that human transcriptionists will still provide the most value for interviews that cover more technical topics or involve non-native English speakers.

However, moving forward, our door has once again opened for AI transcription. Our team will continue testing Sonix.ai on more complex interviews—and we expect that this is just the start of exciting developments in ASR.
